Updating or reprocessing data is a big deal. For many companies, the intellectual property in their data not only drives the business forward; it is the business. If that data gets corrupted or, worse, lost, the business can come to an end. When you are working with a lot of data, it is not a stretch to imagine the alarm bells that ring when someone suggests a mass update or a large-scale reprocessing of data. I was giving some training on blob-triggered Azure Functions, where I discussed how to retrigger or reprocess a blob. There are two possibilities.

- Update the scanInfo file

- Remove the blob receipt from the azure-webjobs-hosts container, which exists in the storage account configured in your AzureWebJobsStorage application setting

Update the scanInfo file

This one is a bit impactful, but if the code in your Azure Function can ignore duplicates, then it might work. This file contains a datetime stamp of the last time the container was checked for additions. If a blob exists with a timestamp later than the one in the scanInfo file, and no blob receipt is present for it, then it will get processed. If you move the datetime in the scanInfo file back a few days, then all blobs in that container with a timestamp later than the new datetime will get reprocessed by the Azure Function. Again, if the blob receipt exists, the blob will NOT be reprocessed. Where is this file?

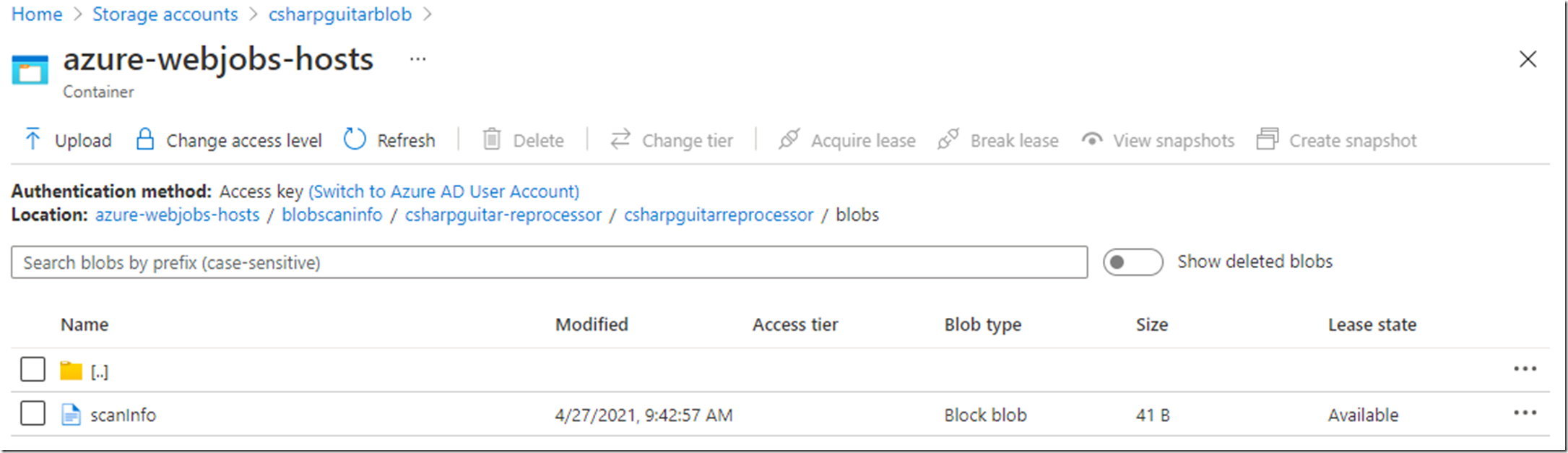

The AzureWebJobsStorage application setting points to an Azure Storage account. Within that storage account there is a container named azure-webjobs-hosts. In that container there is a path like this, shown in Figure 1:

blobscaninfo/functionAppName/storageAccount/storageContainer -> scanInfo -> {"LatestScan":"2021-04-27T07:42:55.999Z"}

Figure 1, reprocess a blob triggered Azure Function
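If you would rather script the rollback than edit the file by hand, here is a minimal sketch of the idea. It assumes the scanInfo content looks exactly like the sample above (a single LatestScan property holding a UTC timestamp with millisecond precision); ScanInfoEditor and RollBack are names I made up for illustration and are not part of any SDK or of the Azure Function Consumer tool.

```csharp
using System;
using System.Globalization;
using System.Text.Json;

public static class ScanInfoEditor   // hypothetical helper, for illustration only
{
    // Returns new scanInfo JSON with LatestScan moved back by the given amount.
    // Assumes the file holds only the LatestScan property, as in the sample above.
    public static string RollBack(string scanInfoJson, TimeSpan amount)
    {
        using JsonDocument doc = JsonDocument.Parse(scanInfoJson);
        DateTimeOffset latest = doc.RootElement.GetProperty("LatestScan").GetDateTimeOffset();

        // Work in UTC and keep the same "…Z" format the sample file uses.
        DateTimeOffset rolledBack = latest.ToUniversalTime() - amount;
        string stamp = rolledBack.UtcDateTime.ToString(
            "yyyy-MM-dd'T'HH:mm:ss.fff'Z'", CultureInfo.InvariantCulture);

        return JsonSerializer.Serialize(new { LatestScan = stamp });
    }
}
```

With the sample content and TimeSpan.FromDays(3), this should give {"LatestScan":"2021-04-24T07:42:55.999Z"}. You would still need to read the scanInfo blob and write the result back yourself, for example with DownloadTextAsync and UploadTextAsync on a CloudBlockBlob, and the same warning about duplicates applies.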

But yes, that can be considered a mass update, and if your code does not protect you against duplicates then you might end up creating a lot of additional work for yourself and possibly destroying or corrupting some data. If you have found that just a few blobs are missing, this seems like overkill, so how can you process only a few?

Reprocess a single blob for a blob triggered Azure Function

Just straight up, it is not so easy, especially if you come from a relational data background where you can run SELECT or UPDATE SQL statements and you're done. We are talking about an entirely different storage model and different query methods. I’ll skip all those complexities and focus directly on the solution.

There are two complexities with this approach. The first one is finding the blob or blobs which were uploaded to the monitored blob container but not processed for some reason. If you are reading this post, then I would assume you have identified that this is the case and know which blob or groups of blobs were not processed. You can get fancy and write some code to compare two sources of the information, or perhaps write some logs in your Run() method which you can use later to compare with an ‘official’ record. I wouldn’t put too much focus on that; instead, trust that this is a relatively rare occurrence that happens on massive datasets. You can in general trust the platform, but exceptions happen, and when they do, it is good to have the means to resolve any issues which come from them.

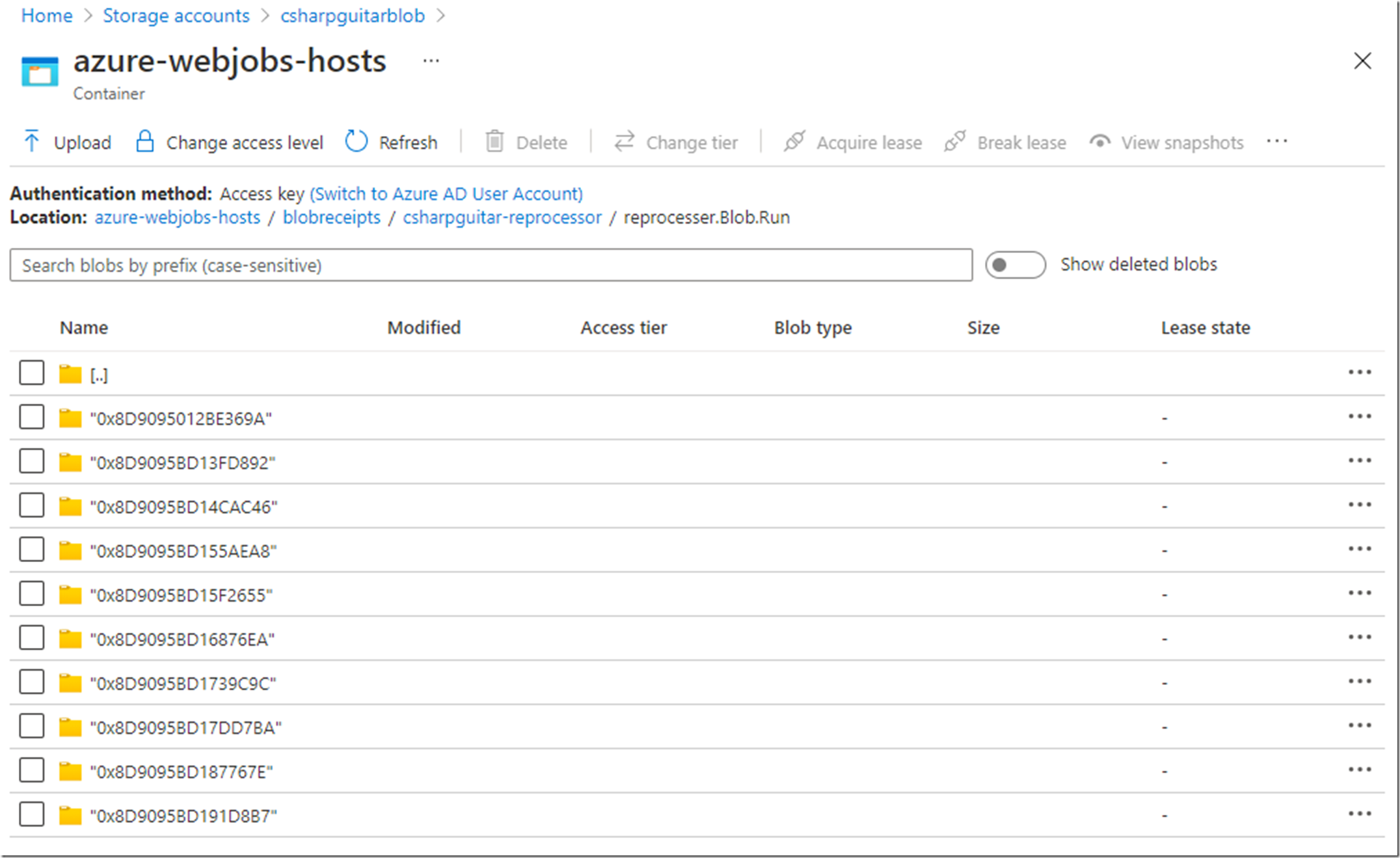

The second complexity is then finding the receipt for that blob in the blobreceipts/functionAppName/namespace.class.Run directory. This exists in the same storage account and container as the one for scanInfo. Below the namespace.class.Run folder is a rather large, folder-based storage structure, as shown in Figure 2. Each one of those folders contains the receipt for a single blob; you need to find the receipt for your blob and delete it in order for the Azure Function to be triggered and reprocess that blob. This might be easy if you have 5 blobs, but not if you have a few hundred, much less thousands.

We should be thankful for the Azure Storage SDK libraries. This is the toolset to use to perform this task. Luckily, I wrote the code here and you can download the compiled program here. NOTE: this is not supported by anyone other than myself and is mostly intended as a proof of concept. Feel free to take the code and make it better.

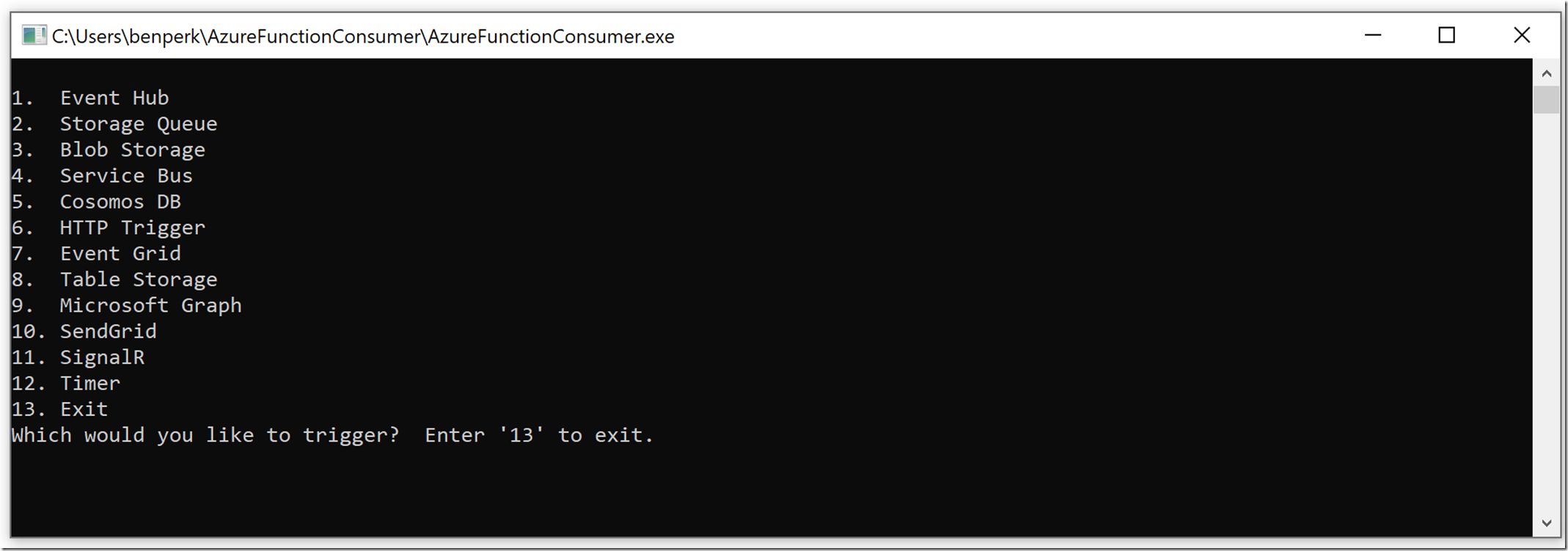

I enhanced the tool, Azure Function Consumer, to search specific directories for the existing blobs based on a given timeframe. This tool, as seen in Figure 3, can also send messages and files to numerous other Azure products which Azure Functions can be triggered by.

Figure 3, reprocess a blob triggered Azure Function

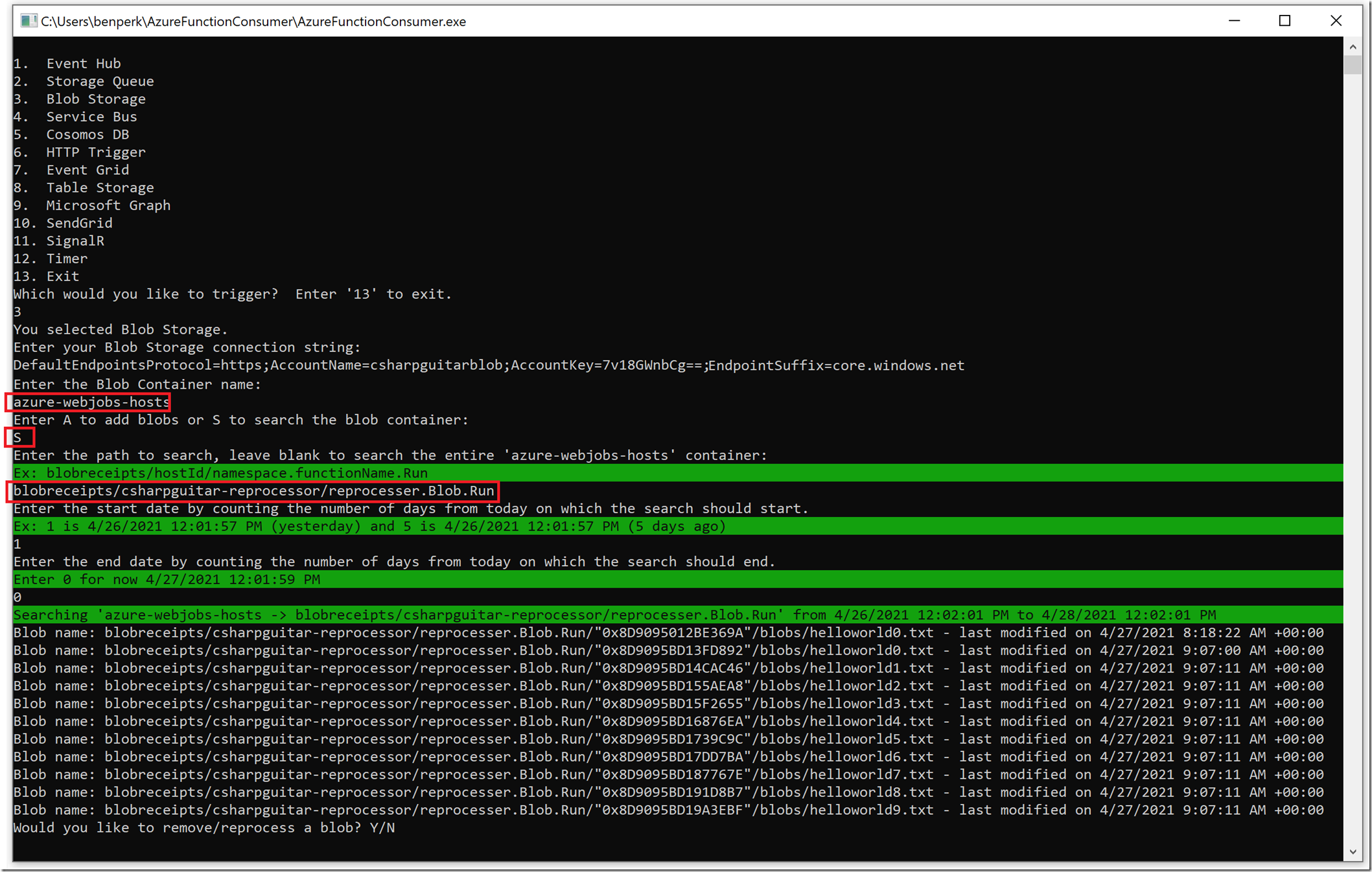

As you see in Figure 4, you can search the blobreceipts directory within the azure-webjobs-hosts container for the missing blob(s). If you know roughly when the blob was added to the container, then you can narrow the query to that timeframe and limit the results.

Figure 4, reprocess a blob triggered Azure Function, Azure Function Consumer
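To give an idea of what such a search can look like, here is a minimal sketch using ListBlobsSegmentedAsync from the Microsoft.Azure.Storage.Blob package. It is not the actual code of the Azure Function Consumer tool. ReceiptSearch and FindReceiptsAsync are hypothetical names, and filtering on the receipt's LastModified value is my assumption about how to approximate the timeframe.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;
using Microsoft.Azure.Storage.Blob;

public static class ReceiptSearch   // hypothetical helper, for illustration only
{
    // Lists receipt blobs under the given prefix (for example
    // "blobreceipts/functionAppName/namespace.class.Run") whose LastModified
    // value falls inside the [from, to] window.
    public static async Task<List<string>> FindReceiptsAsync(
        CloudBlobContainer hostsContainer, string prefix,
        DateTimeOffset from, DateTimeOffset to)
    {
        var matches = new List<string>();
        BlobContinuationToken token = null;
        do
        {
            // Flat listing walks the whole folder-based structure below the prefix.
            BlobResultSegment segment = await hostsContainer.ListBlobsSegmentedAsync(
                prefix, true, BlobListingDetails.None, null, token, null, null);

            foreach (IListBlobItem item in segment.Results)
            {
                if (item is CloudBlockBlob receipt
                    && receipt.Properties.LastModified is DateTimeOffset modified
                    && modified >= from && modified <= to)
                {
                    matches.Add(receipt.Name);
                }
            }
            token = segment.ContinuationToken;   // null once the last page is read
        } while (token != null);

        return matches;
    }
}
```

Here hostsContainer is a reference to the azure-webjobs-hosts container. The names returned are full paths, which is the kind of value you would need when deleting a receipt.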

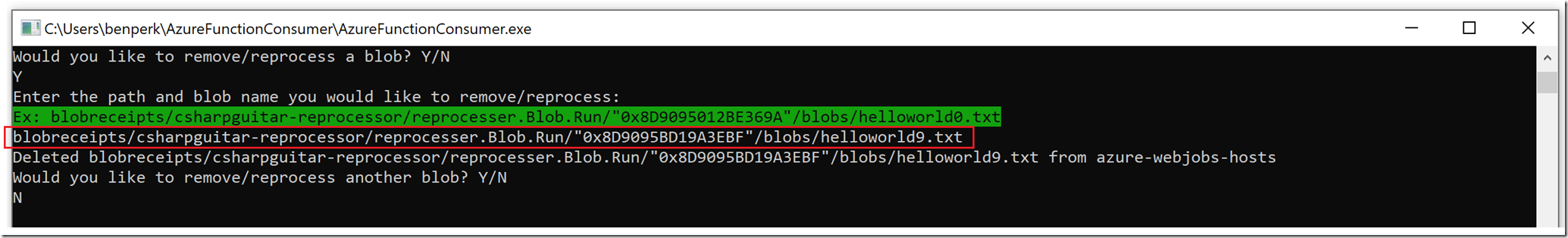

When you find the blob in the list you want reprocessed, enter Y, enter the path similar to that shown in Figure 5 and press enter. ***BE CAREFUL***: I have not tested every single scenario with different forms and kinds of data, and deleting data is dangerous, so take a backup before you make any changes if your requirements dictate that. This isn’t production-ready code; remember, it is a proof of concept. Here is the code snippet that performs the delete.

CloudBlockBlob blockBlob = container.GetBlockBlobReference(path);

await blockBlob.DeleteIfExistsAsync();

Where path is the value you entered in response to the ‘Enter the path and blob name you would like to remove/reprocess:’ question.
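The snippet above does not show where container comes from. A minimal sketch of the surrounding setup could look like the following, assuming the Microsoft.Azure.Storage.Blob package and that the AzureWebJobsStorage connection string is available as an environment variable. ReceiptRemover and RemoveReceiptAsync are hypothetical names, not taken from the tool.

```csharp
using System;
using System.Threading.Tasks;
using Microsoft.Azure.Storage;
using Microsoft.Azure.Storage.Blob;

public static class ReceiptRemover   // hypothetical helper, for illustration only
{
    // Deletes one blob receipt from the azure-webjobs-hosts container.
    // Returns true if a receipt existed at that path and was deleted.
    public static async Task<bool> RemoveReceiptAsync(string path)
    {
        // Assumption: the connection string is exposed as an environment variable.
        string connectionString = Environment.GetEnvironmentVariable("AzureWebJobsStorage");

        CloudStorageAccount account = CloudStorageAccount.Parse(connectionString);
        CloudBlobContainer container = account
            .CreateCloudBlobClient()
            .GetContainerReference("azure-webjobs-hosts");

        CloudBlockBlob blockBlob = container.GetBlockBlobReference(path);
        return await blockBlob.DeleteIfExistsAsync();
    }
}
```

Returning the result of DeleteIfExistsAsync lets the caller tell a real delete apart from a mistyped path, which matters when the path is typed in by hand.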

Before you delete the record, do some setup so you can see that the blob does get reprocessed once the record is deleted. NOTE: The blob must still exist in the container which triggers the Azure Function. This does not have to be the same storage account as the one configured in your AzureWebJobsStorage application setting; it is the container which is configured in your function.json file.

Figure 5, reprocess a blob triggered Azure Function, Azure Function Consumer

Follow these steps to watch the reprocessing of the blob after deletion from the azure-webjobs-hosts container.

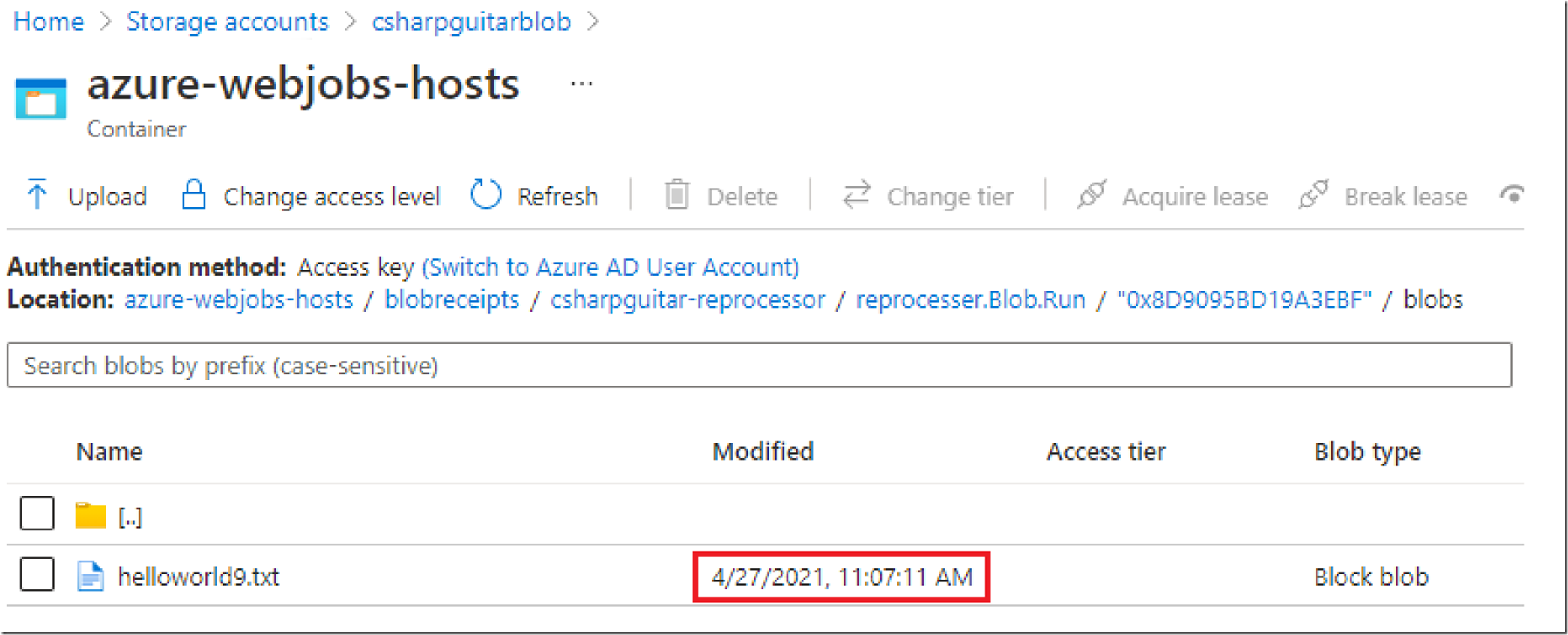

Navigate to the blob in the Azure portal, as shown in Figure 6, and notice the Modified value.

Figure 6, view an Azure Blob in the Azure portal for reprocessing

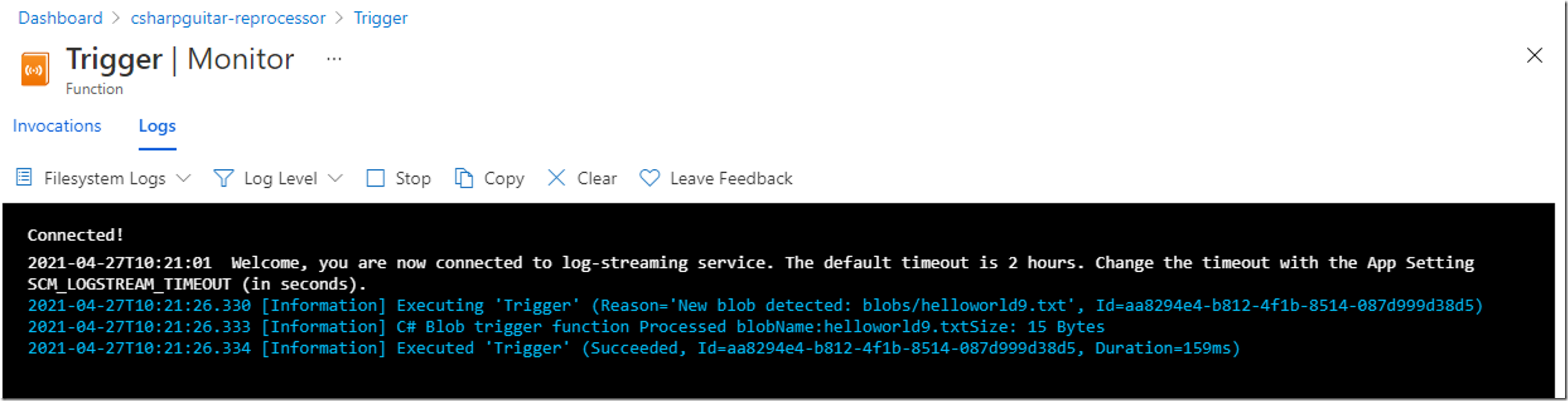

Navigate to the Azure Function which will be triggered when the action is taken to reprocess the blob. When you delete the blob record as shown previously in Figure 5, the Azure Function is triggered, as shown in Figure 7.

Figure 7, reprocessing a blob

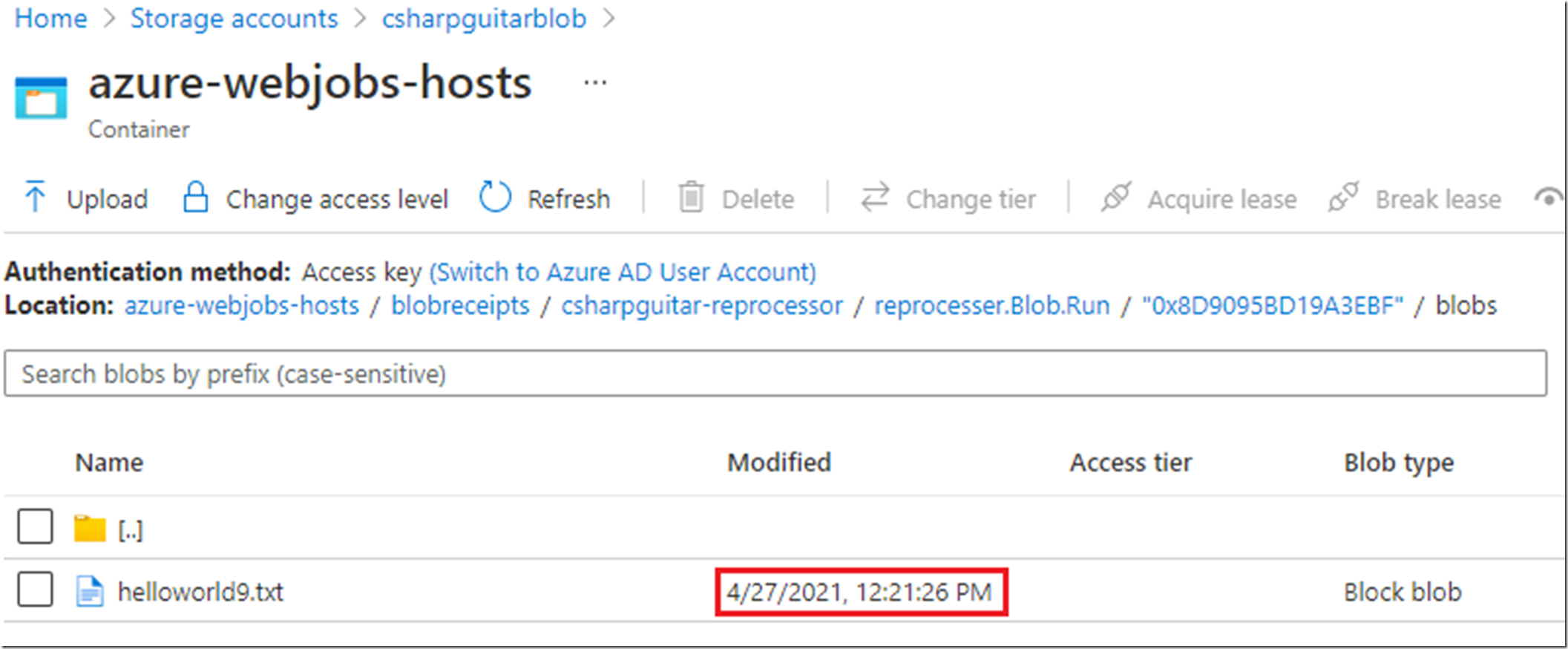

Refresh the record you navigated to in Figure 6, and you will see the Modified stamp is updated as illustrated in Figure 8. This retriggered the Azure Function, resulting in the reprocessing. It happened pretty fast too, but I only had 11 blobs in my container…it can take some time if you have (or had) a massive number of blobs added to your container.

Figure 8, view an Azure Blob in the Azure portal for reprocessing

Well, that’s about it, that’s how you can get a blob triggered Azure Function to reprocess a single blob. Good luck with that. Here are a few links which might be useful.

- The Azure Function Consumer source code

- The Azure Function Consumer releases (need at least version 1.3.0)

- The ListBlobsSegmentedAsync method

- Search over Azure Blob storage content, the site which came up when I searched for "How to search an Azure Blob Storage container"; it was somewhat helpful, but it is more a list of tools than a walkthrough of actually doing it

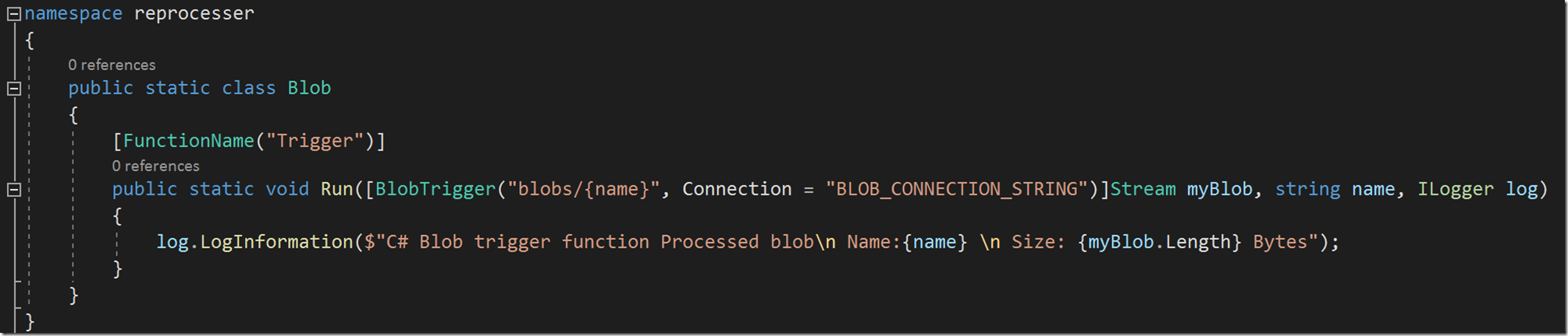

Finally, here is my Azure Function definition so you can see how the structure in the azure-webjobs-hosts container maps to it, i.e. the namespace, class and Run() method.

Figure 9, an Azure Function Run method definition example
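In case the image does not render, here is a minimal sketch of what a blob-triggered function definition generally looks like in C#. The namespace, class, function and container names are placeholders I picked for illustration, not the ones from my function. With these placeholder names, the receipts would be expected under blobreceipts/functionAppName/MyCompany.Functions.BlobProcessor.Run.

```csharp
using System.IO;
using Microsoft.Azure.WebJobs;
using Microsoft.Extensions.Logging;

namespace MyCompany.Functions          // placeholder namespace
{
    public static class BlobProcessor  // placeholder class
    {
        [FunctionName("BlobProcessor")]
        public static void Run(
            // "my-container" is a placeholder for the monitored container;
            // Connection names the application setting that holds the connection string.
            [BlobTrigger("my-container/{name}", Connection = "BLOB_CONNECTION_STRING")] Stream blob,
            string name,
            ILogger log)
        {
            log.LogInformation("Processing blob {Name}, {Length} bytes", name, blob.Length);
        }
    }
}
```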

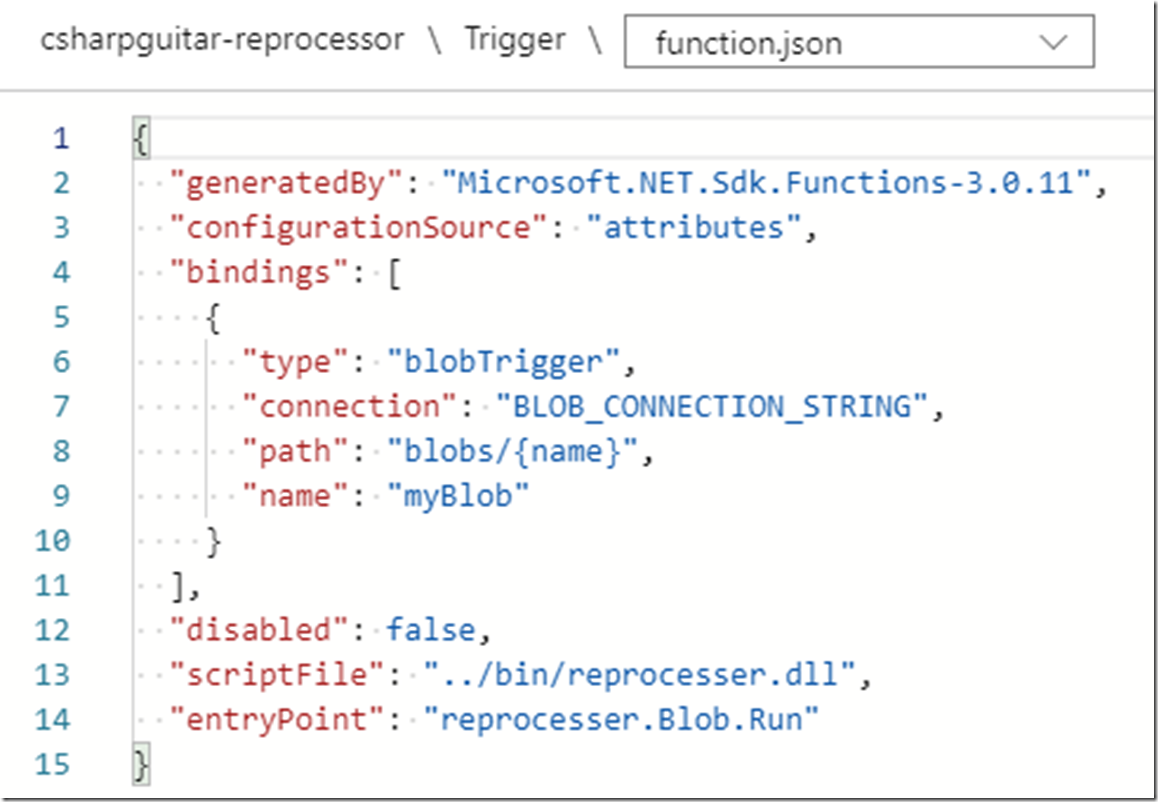

Also, here is the function.json content, which shows where to find the blob container that triggers this Azure Function. Specifically, BLOB_CONNECTION_STRING points to an application setting with the same name. The value of that setting is the connection string for the storage account which holds the container.

Figure 10, an Azure Function function.json blob trigger example

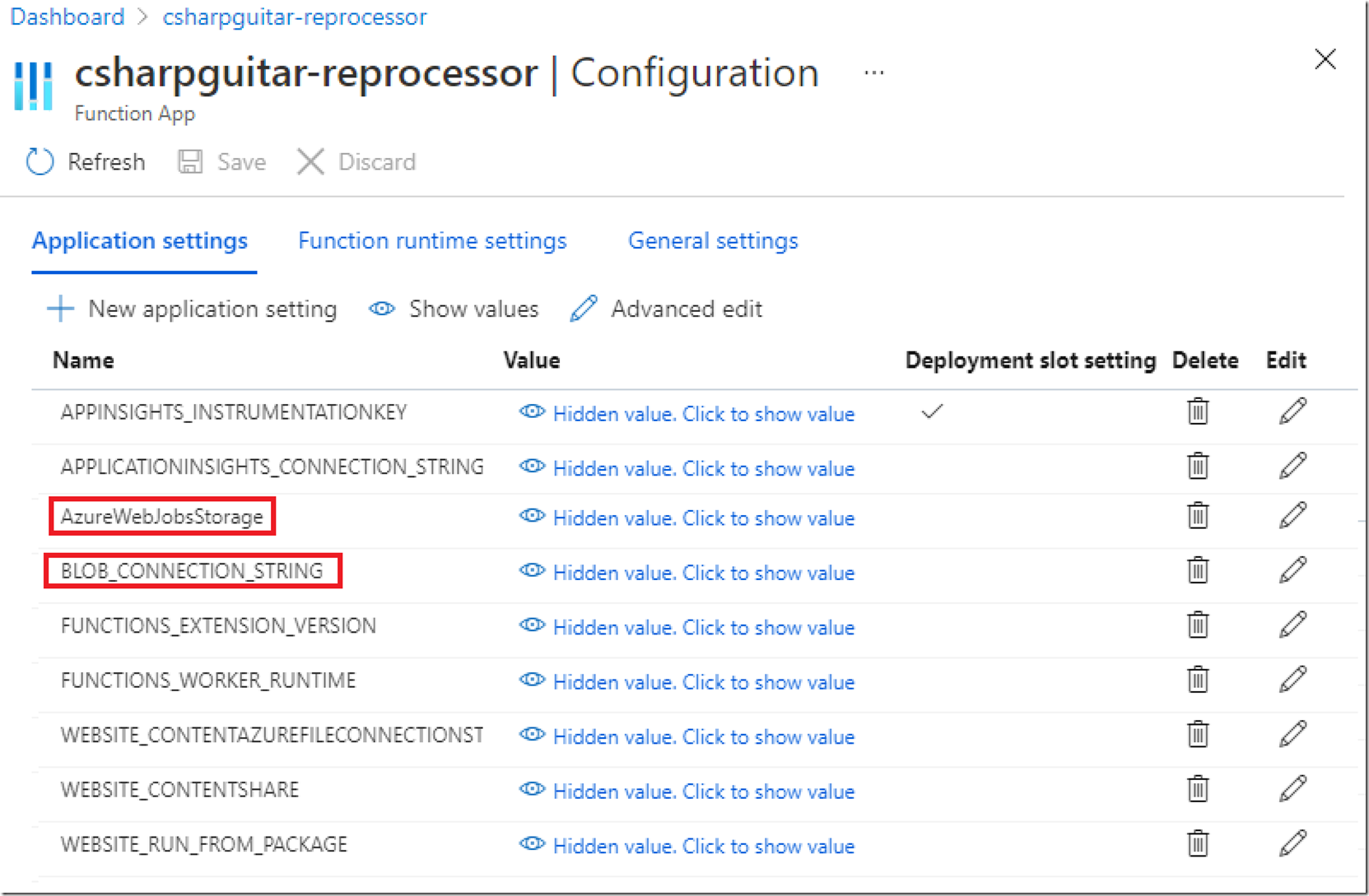

You can see here that I have both application settings, AzureWebJobsStorage and BLOB_CONNECTION_STRING. In my case, they each have a unique endpoint.

Figure 11, Azure Function application settings example

I hope you find this useful.